Spark源码解读之spark-shell

依稀记得在刚开始学习spark框架的时候,第一次接触的就是spark-shell这个东西,但背后它究竟做了什么样的工作,今天来一探究竟:

- 打开spark-shell,实际上它是一个shell脚本,里面最核心的内容如下:

1 | function main() { |

我们可以看到,它调用了spark-submit ,并且以org.apache.spark.repl.Main为主类启动了一个spark job

- 打开spark-submit,它又是一个shell脚本,里面核心内容如下:

1 | exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@" |

我们可以看到,它又调用了spark-class ,并且以org.apache.spark.deploy.SparkSubmit为主类

- 打开spark-class,它又是个shell脚本

1 | build_command() { |

实际上spark-submit在调用spark-class之前给它增加了一个参数org.apache.spark.deploy.SparkSubmit,这就代表了最后spark-shell 启动了一个以org.apache.spark.deploy.SparkSubmit 为主类的jvm进程

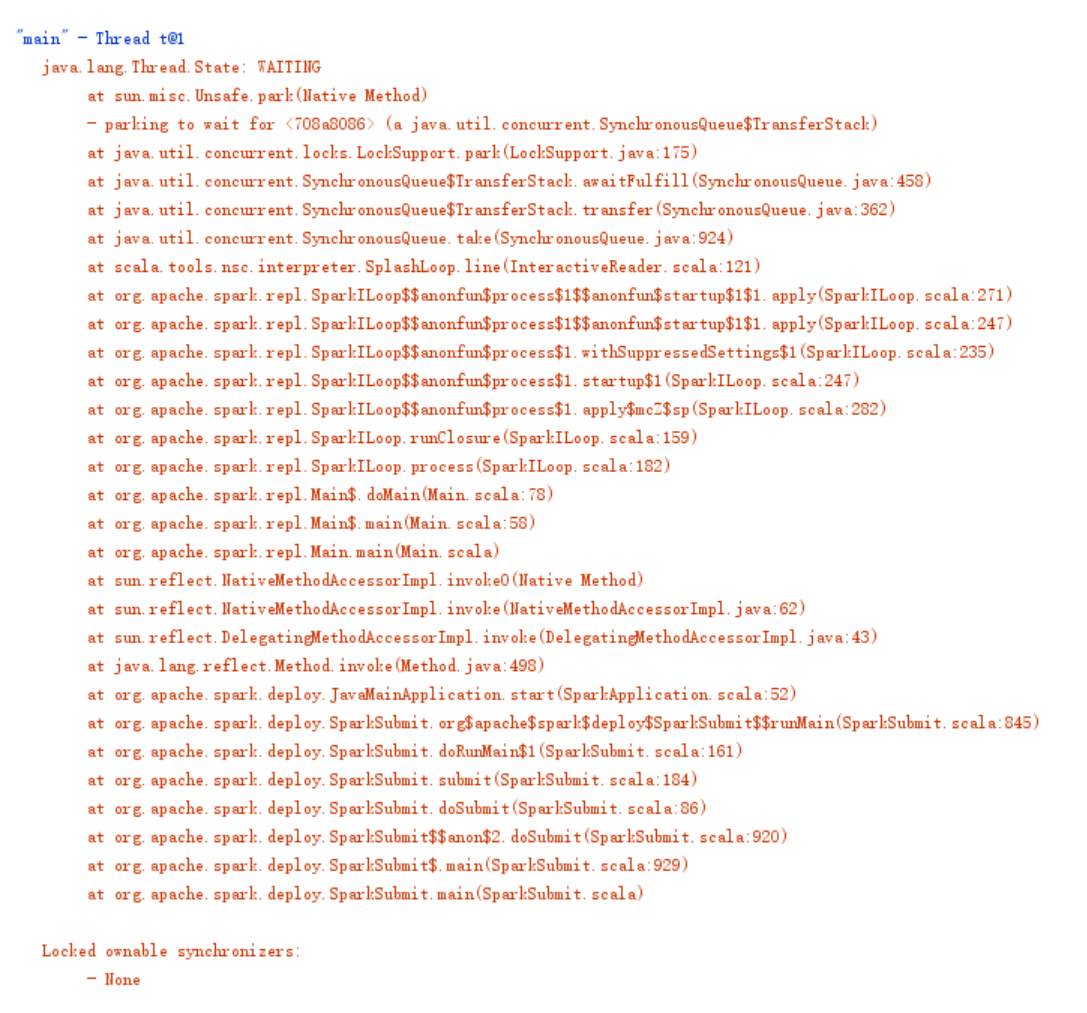

- 查看main线程的堆栈调用情况:

- 总结一下,spark-shell做了以下工作:

- 启动流程:spark-shell —> spark-submit -> spark-class

- 堆栈调用:spark框架启动了一个

org.apache.spark.repl.Main为主类的job,这个job由spark的org.apache.spark.deploy.SparkSubmit去开启,总结一下即为:org.apache.spark.deploy.SparkSubmit->org.apache.spark.repl.Main->org.apache.spark.repl.SparkILoop.process

本博客所有文章除特别声明外,均采用 CC BY-NC-SA 4.0 许可协议。转载请注明来自 tyrantlucifer!

评论